Post by: Anis Farhan

The AI Action Summit 2025 in Paris was not just another technology conference; it was a landmark in the global conversation about the future of artificial intelligence. Bringing together ministers, tech leaders, researchers, and policymakers from nearly sixty nations, the summit underscored both the immense potential of AI and the equally immense risks it carries. The highlight of the gathering was a unified pledge from 58 countries to establish clear, binding ethical standards for AI use across government, industry, and society.

Yet, the story did not end there. The conspicuous absence of two of the world’s AI powerhouses—the United States and the United Kingdom—cast a long shadow over what might otherwise have been a fully celebratory moment. Their hesitation revealed deepening fault lines in how nations envision the rules that should govern artificial intelligence, a technology that is rapidly shaping economies, national security strategies, and social systems.

The participating nations agreed on a framework that prioritizes three pillars: transparency, accountability, and inclusivity. Transparency refers to the need for AI systems to be explainable to users, regulators, and citizens. Accountability emphasizes that governments and companies should remain responsible for the outcomes of their AI tools, rather than shifting blame to the technology itself. Inclusivity highlights the importance of ensuring that AI systems do not reinforce inequalities but instead serve all communities fairly.

The pledge also called for strict measures to combat deepfake misinformation, AI-driven discrimination, and opaque algorithmic decision-making. Countries committed to creating international watchdog bodies that can monitor AI deployments, much like the International Atomic Energy Agency oversees nuclear programs. While such plans are still in their infancy, the symbolism of 58 countries finding common ground sent a powerful message: the world does not want to wait for AI’s risks to spiral before acting.

The refusal of the United States and the United Kingdom to sign the pledge drew immediate and widespread attention. Both countries argued that the proposed framework was too restrictive and could stifle innovation. American tech giants, from Silicon Valley to Seattle, had lobbied their government intensely, warning that strict international oversight might slow development and leave them vulnerable to competition from China.

The UK’s hesitation stemmed from similar concerns, but also from its post-Brexit strategy of positioning itself as a hub for AI entrepreneurship and investment. For London, binding international commitments could be perceived as undermining its flexibility to attract global firms with looser rules.

Critics, however, see their refusal as a missed opportunity. By staying out of the agreement, the US and UK risk appearing as obstacles to responsible governance. Some analysts even argue that this choice could weaken their moral authority when criticizing authoritarian states for unethical uses of AI, such as mass surveillance.

Another major highlight of the summit was China’s participation. Often criticized for its domestic use of AI in surveillance and censorship, Beijing surprised observers by supporting the Paris pledge. Analysts interpret this move as a strategic calculation. By aligning itself with a global consensus on AI ethics, China signals its willingness to be seen as a responsible leader in emerging technologies—especially at a time when Western unity is fractured.

For many countries in Africa, Asia, and Latin America, China’s signature carried extra weight, as they rely heavily on Chinese technology exports. The gesture reassured them that adopting Chinese AI products would not necessarily mean turning a blind eye to ethical standards. Still, skeptics question whether China’s commitment will hold once it comes into tension with its domestic priorities.

The stakes of AI governance extend far beyond abstract debates about ethics. From healthcare algorithms that guide treatment decisions to AI-powered drones on the battlefield, the technology is already entangled in life-and-death matters. Without strong regulation, experts warn of scenarios where biased data leads to wrongful arrests, financial exclusion, or systemic discrimination.

Equally worrying is the rise of deepfake technology. Already, doctored videos and AI-generated voices are being used to spread misinformation in elections worldwide. If left unchecked, such tools could destabilize democracies by eroding trust in media and institutions.

By endorsing shared ethical standards, the 58 countries hope to create a buffer against these dangers. The framework signals to companies that there will be international scrutiny if they misuse AI for profit at the expense of society. It also encourages cross-border collaboration in research, allowing countries to share best practices and hold each other accountable.

One of the enduring tensions at the summit was the classic debate between innovation and regulation. Proponents of looser rules argue that the faster AI advances, the more benefits society can reap—new medical breakthroughs, smarter infrastructure, and economic growth. Heavy-handed oversight, they fear, could slow down this progress.

On the other hand, advocates for regulation stress that history is filled with cautionary tales in which unchecked innovation led to disaster, from the 2008 financial crisis to environmental destruction. AI, they argue, is too powerful and too embedded in daily life to be left unregulated. Striking a balance will be the challenge for years to come, and the Paris summit may mark the first serious attempt to chart a middle path.

The European Union emerged as a leading force behind the summit’s outcomes. With its AI Act already considered the most comprehensive regulatory framework in the world, the EU used its influence to push for international adoption. European officials emphasized that just as environmental and human rights standards cannot be left to voluntary goodwill, AI governance requires binding agreements.

This approach has won admirers and critics alike. Supporters argue that the EU is setting the gold standard for responsible innovation. Critics warn that if rules are too rigid, smaller firms may find it impossible to compete with tech giants who can absorb compliance costs. Nonetheless, the EU’s persistence has given it a central role in shaping the global AI narrative.

For developing nations, the summit was both a promise and a challenge. Countries in Africa, South Asia, and Latin America welcomed the emphasis on inclusivity, as AI has the potential to either bridge or deepen global inequalities. For instance, AI-driven education tools could revolutionize access to learning in remote villages, but only if they are made affordable and culturally relevant.

At the same time, leaders from these regions voiced concerns about “digital colonialism,” where rich nations dictate the rules and leave poorer ones to simply comply. They called for financial support, capacity building, and fair partnerships to ensure that the benefits of AI are distributed equitably. Without this, global standards might unintentionally reinforce the very inequalities they seek to eliminate.

As the summit concluded, it became clear that the Paris agreement was just the beginning. Drafting a set of principles is one thing; enforcing them across different political, cultural, and economic systems is another. Many observers expect future tensions as countries interpret the pledge differently. Some may prioritize privacy, while others focus on national security. Some may demand strict oversight, while others quietly permit more flexibility at home.

For the US and UK, pressure is likely to mount. If the Paris framework gains traction, they may face isolation from allies and partners who embrace the ethical standards. Conversely, if innovation thrives in their freer markets, they could argue that their caution was justified.

The next few years will reveal which path yields greater trust, prosperity, and technological leadership. What is certain, however, is that AI has officially entered the world stage not just as a technological challenge, but as a geopolitical one.

The AI Action Summit of 2025 will be remembered as a turning point. By bringing together 58 nations under a common banner of ethical responsibility, it highlighted both the promise and the peril of artificial intelligence. Yet the refusal of the US and UK to sign the pledge reminds us that technology is never just about machines: it is about power, politics, and the values societies choose to uphold.

As the world watches the ripple effects of the Paris pledge, one question looms large: Will the global community find the unity needed to steer AI toward humanity’s best interests, or will divisions leave the future of technology vulnerable to competing agendas?

This article is intended for informational purposes only. The perspectives expressed are based on available reports and analyses and do not represent the official stance of Newsible Asia. Readers are encouraged to seek additional sources for a more comprehensive understanding of the topic.

Rashmika Mandanna, Vijay Deverakonda Set to Marry on Feb 26

Rashmika Mandanna and Vijay Deverakonda are reportedly set to marry on February 26, 2026, in a priva

FIFA Stands by 2026 World Cup Ticket Prices Despite Fan Criticism

FIFA defends the high ticket prices for the 2026 World Cup, introducing a $60 tier to make matches m

Trump Claims He Ended India-Pakistan War, Faces Strong Denial

Donald Trump says he brokered the ceasefire between India and Pakistan and resolved eight wars, but

Two Telangana Women Die in California Road Accident, Families Seek Help

Two Telangana women pursuing Master's in the US died in a tragic California crash. Families urge gov

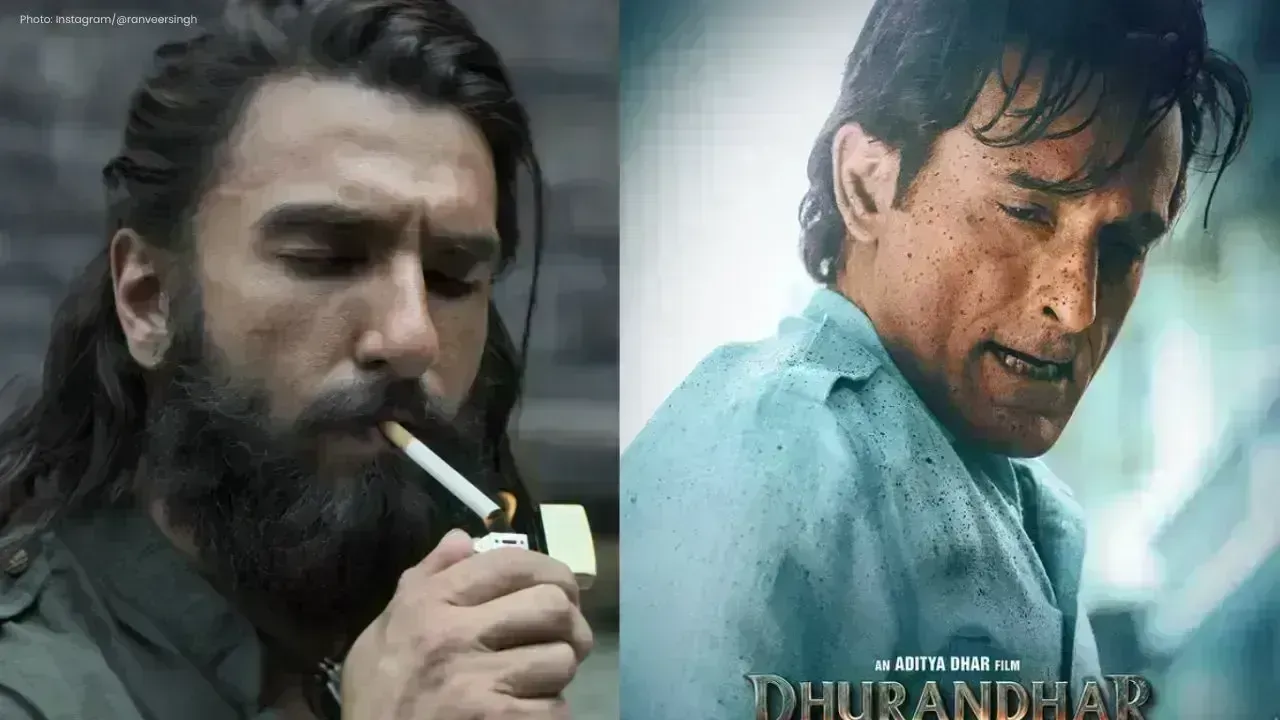

Ranveer Singh’s Dhurandhar Roars Past ₹1100 Cr Worldwide

Ranveer Singh’s Dhurandhar stays unstoppable in week four, crossing ₹1100 crore globally and overtak

Asian Stocks Surge as Dollar Dips, Silver Hits $80 Amid Rate Cut Hopes

Asian markets rally to six-week highs while silver breaks $80, driven by Federal Reserve rate cut ex