Post by: Anis Farhan

For decades, a phone call meant one thing: a real human voice speaking from the other end. Even in automated systems, there was always a limit to how “human” a machine could sound. That line is now disappearing.

Today, voices can be generated artificially with breath, emotion, hesitation, accents, and tone all carefully replicated. A machine can now speak in a voice that sounds warm, tired, friendly, or concerned. It can mimic a regional accent. It can copy a real person’s voice closely enough to fool listeners.

And it is already entering customer service.

When you receive a reminder call, a service update, or a support verification request, the question you never had to ask now becomes important:

Is this a person… or a program?

AI voice cloning refers to software that can reproduce a human voice using artificial intelligence. Given enough voice data, a system can recreate someone’s speech pattern, emotional range, and pronunciation style with surprising accuracy.

The technology does not “record and replay.” It synthesises speech from text by building a digital replica of voice behaviour.

Instead of playing a recording, it generates speech in real time.

That difference is critical.

Real-time generation allows:

Dynamic responses

Conversation memory

Emotional tone shifts

Language changes

Continuous interaction

You are no longer listening to a recorded robot.

You are interacting with a voice simulation.

The system breaks down how a person speaks into mathematical patterns. It learns:

Pitch and tone

Speed and rhythm

Emphasis patterns

Silence gaps

Stress reactions

Emotional fluctuations

Once trained, the AI can recreate that voice saying things the original speaker never said.

And it can do so convincingly.
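As a rough illustration of what "mathematical patterns" can mean, the sketch below estimates one of the features listed above, pitch, from a short synthetic tone using autocorrelation. It is a toy under stated assumptions: the function names (`synth_tone`, `estimate_pitch`), the 8 kHz sample rate, and the 50 to 400 Hz search range are illustrative choices, and real voice-cloning systems rely on learned models rather than a single hand-written measurement like this.

```python
import math


def synth_tone(freq_hz, sample_rate=8000, duration_s=0.1):
    """Generate a pure sine tone as a stand-in for a voiced speech frame."""
    n = int(sample_rate * duration_s)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]


def estimate_pitch(samples, sample_rate=8000, min_hz=50, max_hz=400):
    """Estimate the fundamental frequency (Hz) by autocorrelation.

    The signal is compared with delayed copies of itself; the delay (lag)
    at which it matches best is taken as one pitch period.
    """
    min_lag = sample_rate // max_hz  # shortest period considered
    max_lag = sample_rate // min_hz  # longest period considered
    if len(samples) <= max_lag:
        raise ValueError("frame too short for the requested pitch range")

    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        # Sum of products between the signal and its shifted copy.
        score = sum(samples[i] * samples[i + lag]
                    for i in range(len(samples) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag


if __name__ == "__main__":
    frame = synth_tone(200.0)  # hypothetical 200 Hz "voice"
    print(f"estimated pitch: {estimate_pitch(frame):.1f} Hz")
```

For the 200 Hz test tone the estimate should land close to 200 Hz. Rhythm, emphasis, and pauses would each need their own measurements, which is part of why modern systems learn these patterns from data instead of computing them one by one.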

Customer support is expensive.

Companies spend massive amounts on call centres, training, infrastructure, and human resources. AI voice systems promise to reduce that cost dramatically.

Companies turn to voice clones because they:

Scale instantly

Never sleep

Don’t demand wages or breaks

Never get angry

Follow scripts without deviating

Handle multiple calls at once

Don’t suffer fatigue

From a business perspective, it feels like the perfect solution.

From a human perspective, it is unsettling.

Basic robotic systems frustrate customers.

Press one. Press two. Repeat.

Voice cloning feels conversational.

It handles:

Natural questions

Complaints

Unscripted input

Language switching

Emotional reactions

That improvement leads companies to deploy it aggressively.

The voice sounds human.

The patience feels human.

The speed is inhuman.

The reason voice cloning works is the same reason it is risky.

Humans trust voices.

A familiar voice lowers psychological defences.

A calm tone increases compliance.

A friendly accent increases comfort.

When a machine speaks like a person, the listener lowers suspicion.

And in customer service, people already expect authority.

When the voice says it is “calling from your bank” or “updating your delivery”, trust activates automatically.

Visually, people learn to identify fake images.

Auditory deception is harder.

The brain evolved to trust spoken language.

When we hear:

Breath patterns

Emotional inflection

Natural pauses

we assume authenticity.

Voice cloning exploits a deep neurological shortcut.

It feels real because nature trained us to believe it is.

Criminals do not wait for regulation.

They adopt new technology faster than governments can react.

Voice cloning scams are rising globally.

Scam calls now:

Mimic family members

Impersonate company staff

Copy managers’ voices

Simulate officials

Fake emergency situations

A scammer may clone a loved one’s voice and call with a crisis story.

A victim hears familiarity.

Panic overrides logic.

Money is transferred quickly.

The deception succeeds.

Texts can be questioned.

Emails can be examined.

Voices are personal.

When someone calls sounding like your brother, mother, or colleague, doubt disappears.

Fear enters.

Voice cloning attacks emotions directly.

Companies argue that voice cloning improves service.

And sometimes, it does.

It can:

Reduce waiting times

Improve multilingual support

Maintain consistent service

Operate 24/7

Handle high call volumes

But emotion cannot be automated safely.

An algorithm does not understand genuine distress.

It reacts according to data.

That difference matters.

Human agents notice:

Tone changes

Hesitation

Confusion

Fear

Emotional breakdown

AI processes the words.

Not the soul.

In emergencies, nuance saves lives.

Automated voices can fail there.

Customer service rests on one foundation:

Trust.

When voices are fabricated, that trust weakens.

If customers cannot tell:

Who is real

Who represents a company

Who may be a fraud

then the phone becomes hostile terrain.

People stop answering calls.

Support lines lose credibility.

Even legitimate companies become suspect.

Widespread voice cloning may erode public confidence in voice communication itself.

Voice cloning lives in legal grey areas.

The questions are harsh:

Who owns a person’s voice?

Can it be used without permission?

Who is responsible for harm caused by cloned speech?

How do you prove misuse?

How do you claim identity theft when identity is synthetic?

Law moves slowly.

Technology moves fast.

Victims wait in between.

Previously, identity theft involved documents.

Now it involves voice.

Your voice is now a password.

And it can be stolen without your knowledge.

Trust in communication is collapsing.

People are becoming suspicious of:

Unknown callers

Automated messages

Recorded warnings

Digital voices

Communication anxiety is increasing.

The phone no longer feels safe.

The moment a voice sounds “too perfect,” discomfort follows.

Society is quietly developing digital paranoia.

Voice cloning is not evil.

It is powerful.

Like all power, it needs boundaries.

Ethical deployment requires:

Consent from voice owners

Clear disclosure to consumers

No impersonation

Strong fraud prevention

Transparent policies

User opt-out rights

If a voice is artificial, users must be told.

Silence is deception.

When companies announce:

“This call uses synthetic voice technology for assistance,”

trust remains intact.

It is secrecy that damages credibility.

People must now treat phone calls with caution.

Practical steps include:

Never trusting urgent demands over the phone

Confirming requests through official apps

Hanging up and calling back using known numbers

Creating family verification codes

Never sharing OTPs or account details

Avoiding emotional reactions during calls

Questioning any financial urgency

The era of blind trust is over.

Most people do not understand how advanced AI voices are.

Schools don’t teach it.

Workplaces don’t explain it.

Families remain unaware.

This knowledge gap is dangerous.

Digital literacy must now include audio awareness.

Not just internet safety.

Regulation is slow.

But harm is fast.

Authorities must:

Criminalise voice cloning used to impersonate a person

Enforce disclosure rules

Penalise misuse

Demand authentication standards

Establish consent frameworks

Technology without law becomes chaos.

And voice is too intimate to leave unprotected.

Internal communication will not be immune.

Voice cloning may be used for:

Meeting summaries

Training modules

Instructional calls

Customer messaging

But also exploited for:

Fake managerial orders

Internal fraud

Impersonation

Corporate manipulation

Organisations must secure voice approval systems the same way they secure passwords.
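One way to picture "securing voice approvals like passwords" is a challenge-response check that does not depend on how the caller sounds. The sketch below is a minimal illustration, not a vetted security design: the function names (`new_challenge`, `respond`, `verify`), the shared secret, and the 8-character code length are all assumptions made for the example.

```python
import hashlib
import hmac
import secrets


def new_challenge() -> str:
    """Create a random one-time challenge to read out to the caller."""
    return secrets.token_hex(8)


def respond(shared_secret: bytes, challenge: str) -> str:
    """Compute the short code that only a holder of the secret can produce."""
    digest = hmac.new(shared_secret, challenge.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:8]  # shortened so it can be spoken aloud


def verify(shared_secret: bytes, challenge: str, response: str) -> bool:
    """Check the caller's code using a constant-time comparison."""
    expected = respond(shared_secret, challenge)
    return hmac.compare_digest(expected, response)


if __name__ == "__main__":
    secret = b"example-shared-secret"  # hypothetical; would be provisioned securely
    challenge = new_challenge()
    code = respond(secret, challenge)  # computed on the genuine manager's device
    print("genuine caller accepted:", verify(secret, challenge, code))
    print("impostor accepted:", verify(secret, challenge, "wrong-code"))
```

The point of the design is that a cloned voice alone is not enough: the approval depends on something the caller holds, not on something the listener hears. The same idea underlies the family verification codes suggested earlier, in a simpler spoken form.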

Will people come to accept synthetic voices? Possibly.

Humans adapt.

But acceptance will come with discomfort.

People may grow used to not knowing if a voice is real.

That is not progress.

That is loss of certainty.

Technology should improve life.

Not confuse it.

Voice cloning can help:

Disabled individuals

Elder support systems

Language access

Emergency management

It can also:

Destroy identity

Manipulate trust

Commit fraud

Spread fear

The tool itself is neutral.

Its usage is not.

The world is not prepared.

Not legally.

Not socially.

Not emotionally.

Voice cloning arrived without warning.

It entered quietly.

It disguised itself as convenience.

But behind comfort hides consequence.

Until strong safeguards exist, trust will continue to erode.

And when trust disappears…

Communication breaks down.

Voice cloning may be one of the most important technology debates of this decade.

Because when humans can no longer trust voices…

What remains sacred?

DISCLAIMER

This article is intended for educational and informational purposes only. It does not provide legal, cybersecurity, or technical advice. Readers are advised to seek professional guidance for security-related decisions and stay updated with regulations regarding artificial intelligence technologies.

Kim Jong Un Celebrates New Year in Pyongyang with Daughter Ju Ae

Kim Jong Un celebrates New Year in Pyongyang with fireworks, patriotic shows, and his daughter Ju Ae

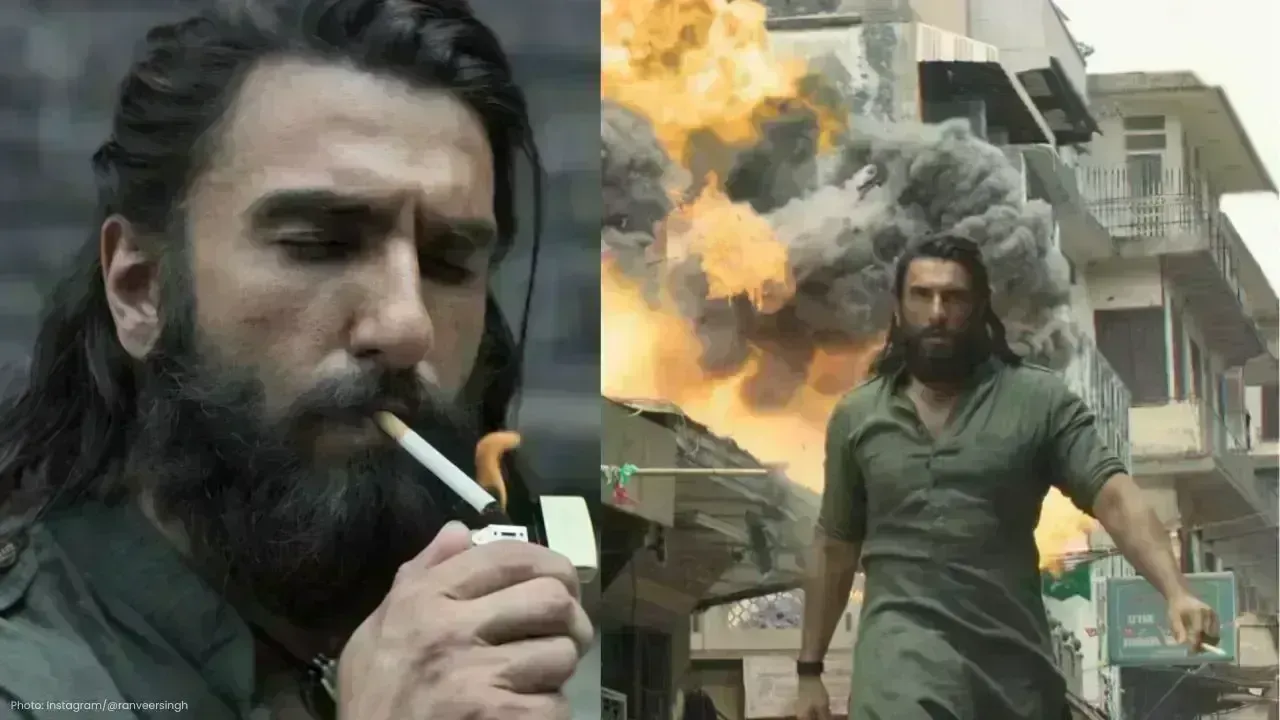

Dhurandhar Day 27 Box Office: Ranveer Singh’s Spy Thriller Soars Big

Dhurandhar earns ₹1117 crore worldwide by day 27, becoming one of 2026’s biggest hits. Ranveer Singh

Hong Kong Welcomes 2026 Without Fireworks After Deadly Fire

Hong Kong rang in 2026 without fireworks for the first time in years, choosing light shows and music

Ranveer Singh’s Dhurandhar Hits ₹1000 Cr Despite Gulf Ban Loss

Dhurandhar crosses ₹1000 crore globally but loses $10M as Gulf nations ban the film. Fans in holiday

China Claims India-Pakistan Peace Role Amid India’s Firm Denial

China claims to have mediated peace between India and Pakistan, but India rejects third-party involv

Mel Gibson and Rosalind Ross Split After Nearly a Decade Together

Mel Gibson and Rosalind Ross confirm split after nearly a year. They will continue co-parenting thei