You have not yet added any article to your bookmarks!

Join 10k+ people to get notified about new posts, news and tips.

Do not worry we don't spam!

Post by : Anis Farhan

The idea is alluring: a smart browser that doesn’t just display websites, but helps you with summarization, task automation, email integration, and intelligent insights as you surf. Perplexity’s Comet, an AI-powered browser, represents this leap. But beneath the gloss of convenience lie stark new security and privacy risks. Comet isn’t just another browser—it has an AI assistant baked into its core, capable of acting on your behalf. That autonomy changes everything: the threat surface balloons, old security rules may not apply, and users’ sensitive data could be exposed in ways we’re only beginning to understand.

Let’s dig into how Comet works, what vulnerabilities have already been found, why those matters for all AI browsers, what risks remain, and how users and technologies might respond. This isn’t fear-mongering—it’s a roadmap for understanding the trade-offs when the browser becomes an agent rather than a passive tool.

Comet isn’t just a browser with AI features; its AI assistant is deeply integrated. It can perform tasks on your behalf—summarizing webpages, reading your tabs, accessing content from your email or calendar, and automating clicks or navigation when asked.

Because the AI has access to and context from your authenticated sessions (for example, if you’re logged into your email, or have open browser tabs with sensitive content), it operates with permissions that traditional browsers do not grant to any code coming from arbitrary webpages.

Unlike extensions or plug-ins, which are often isolated and limited by strict browser security policies, Comet’s assistant treats webpage content and user instructions together in many cases. This design enables ease of use, but it also introduces novel vulnerabilities.

Security researchers, especially from Brave, have identified serious flaws in how Comet handles user prompts and webpage content. One of the most prominent issues is indirect prompt injection.

In certain cases, when a user asks Comet to summarize a page, the browser doesn’t distinguish between user-issued command and embedded instructions on the page itself. Malicious actors could hide text (even invisible to the human eye) within the page that instructs the AI to perform undesired actions.

For example, Brave demonstrated a proof-of-concept where a hidden instruction embedded in a webpage or comment could cause Comet to fetch a one-time password from Gmail or extract personal data without explicit user consent. Because Comet’s assistant had access to authenticated sessions, such hidden prompts could leverage that access.

These vulnerabilities were patched in part; Comet developers acknowledged the issues and took steps to remediate. But testing suggests that some risk remains, particularly when it comes to subtle or new injection vectors.

To understand why these vulnerabilities are particularly dangerous, it helps to compare with how current browsers work:

Traditional Browsers and Security Barriers

In normal browsers, websites run in sandboxed environments. Same-origin policy (SOP), cross-site restrictions, and limited permissions protect user data. Web content doesn’t run with the same privileges as the user; it can’t normally access another tab’s private data or authenticate sessions across domains without explicit user interaction.

AI Agents Break Boundaries

In Comet, the AI agent effectively has greater privileges. If a user is logged into email, Comet can access that context when responding to certain prompts. If malicious instructions are embedded, the AI may act with full access. This breaks many of the protections that SOP, CORS, and cross-domain policies traditionally enforce.

Reduced Human Oversight

One of the biggest risks is that users no longer manually check every click or every suspicious UI element. The AI might follow a hidden instruction that a user never saw. Human intuition and visual cues (like seeing a sketchy URL or a phishing form) are replaced by trust in the assistant. That trust can be exploited.

Data Exposure across Devices & Accounts

Comet's capabilities include referencing your tabs, reading content from open windows, and interacting with emails and calendar. This means if any of those contexts are compromised, the AI's reach could cross over into your most private data. The broader the access, the larger the risk if vulnerabilities are found.

Beyond prompt injection, other issues emerge:

Phishing & Malicious Code: With AI helping to navigate or summarizing content, the AI may not recognize cues that humans often catch—misspelled URLs, odd HTML constructs, suspicious forms. Researchers found that Comet could be guided by hidden malicious directives to visit a phishing site or attempt to collect login credentials.

Unwanted Transactions or Automation Misuse: Because Comet can click, type, fill forms, or navigate based on instructions, it could be tricked to make purchases, send emails, or perform actions that the user didn’t intend, especially if malicious commands are embedded.

Privacy Loss & Surveillance: The more a browser collects context (open tabs, calendar events, emails), the more it could be used—intentionally or unintentionally—to build profiles, track behavior, or share sensitive data with cloud servers. Even with privacy modes, opaque definitions and unclear policy boundaries can leave users exposed.

Dependency & Single Point of Failure: When an AI browser becomes the main gateway to the internet, any flaw affects everything. A single exploit could compromise many aspects of a user’s digital life. Users may also become overly reliant, trusting the AI, and discounting potential risks.

In response to these findings, Comet’s developers have taken measures:

They fixed some identified prompt injection vulnerabilities after they were reported.

Perplexity has maintained a bug bounty program and increased audits.

Promoted privacy modes and settings to limit cloud interaction when possible.

However, some concerns remain:

Whether all attack vectors are patched is uncertain. White-on-white text, HTML comments, or other hidden elements may still pose exploitable vulnerability unless content sanitization or strict separation of trusted versus untrusted content is enforced.

User control and visibility over what the AI does in the background is still limited. Knowing when and how your browser is reading confidential info, or automating tasks, may not always be obvious.

Transparency about data use, logging, retention, and how much processing happens locally versus in the cloud is still somewhat vague. Users concerned about sensitive workflows (banking, corporate work, private communication) may find those gaps significant.

While the technology catches up, users can take steps to reduce risk:

Limit Permissions

Only grant AI assistant access to those things you are comfortable with. For example, avoid giving access to email or calendar if not necessary. Use separate accounts or sandboxed environments.

Use Sensitive Information Sparingly Via AI

For tasks involving passwords, financial data, identity-related communication, consider using traditional browsers or tools that don’t automatically engage AI agents.

Be Cautious with Summarize or Automate Features

If you see “Summarize this page” or similar prompts, remember that you may be asking the AI to read all visible and hidden content. Don’t use such features on pages you don’t trust or that have user-generated content.

Update Promptly & Use Latest Versions

Use updates as soon as available. Fixes for known vulnerabilities are often rolled out quickly but incompletely. Staying on latest version helps.

Monitor AI Behavior

Watch what actions the browser is taking. If it clicks unexpected links, sends emails, or shows actions you didn’t expect, disable those features. Manage logs or review what data the browser retains.

Use Security Tools in Parallel

Anti-phishing tools, safe-browsing filters, password managers, and sandbox environments add layers of protection.

AI browsers are new and evolving. To protect users and build trust, several structural reforms should occur:

Strong Separation Between Untrusted Web Content and AI Command Context

The browser and the AI model must clearly isolate what is user instruction versus what is content fetched from websites. Parsing needs to be secure against hidden or embedded prompts.

Fine-Grained Permissions & Transparent Logs

Users should be able to see exactly when the AI accessed their email, calendar, tabs, or other private content. Audit logs should be user accessible and deletable.

Stricter Input Sanitization & Prompt Filtering

Prevent hidden text, comments, or obfuscated elements from being interpreted as user command. Use content sanitization and detect suspicious embedded instructions.

Privacy Policy Clarity

Clear statements about what browsing data is stored, where it's stored (local or cloud), how it's used (training, analytics, internal improvement), and with whom it's shared.

Regulation & Industry Standards

Standards for AI browsing agents: secure by default; minimal privilege; AI trust models; external audits. Regulatory oversight to ensure user safety, especially for browsers that handle sensitive tasks.

Fallback & User Controls

Let users disable automation, AI agent features, or revert to a “classic” browsing mode. Give them control over when AI can act, when it needs explicit permission.

AI browsers like Comet are likely to become more common. The benefits—automation, productivity, streamlined workflows—are real and compelling. But as they become more capable, the risks will scale.

Key factors that will determine whether these tools become safe or dangerous:

How quickly known vulnerabilities are fully sealed, and how proactively future ones are guarded against.

Whether design shifts toward safer architectures (e.g. local processing, minimal trust, sandboxed AI agents).

How transparent companies are about data collection, model behavior, and sharing.

Regulatory frameworks, industry standards, and public scrutiny. If trust erodes (due to data breaches or misuse), adoption might stall.

If done well, AI browsers might redefine what we expect from the web—more context, more assistance, safer interactions. If done poorly, they could turn browsing into a minefield of privacy leakages and automated exploits.

Perplexity’s Comet is a harbinger of browsers that do more than render pages—they assist, automate, and anticipate. That transforms the web experience—and transforms the risks. Hidden prompt injection, excessive privilege, opaque data access, and automation without oversight are not theoretical concerns; they’re already demonstrated in real experiments. For users, the convenience comes with new responsibilities. For developers, with new design challenges. And for regulators, with new kinds of threats to guard against.

The web could become smarter, but also riskier. The trade-off is being written now.

This article is for educational and informational purposes. It summarizes known vulnerabilities and security research related to AI-powered browsers such as Perplexity’s Comet, based on publicly disclosed reports. It does not represent legal advice or guarantee full coverage of risk. The technology is evolving; readers should verify current browser versions and patches before making security decisions.

Ashes Failure Puts Brendon McCullum Under Growing England Pressure

England’s Ashes loss has sparked questions over Bazball, as ECB officials review Test failures and B

Kim Jong Un Celebrates New Year in Pyongyang with Daughter Ju Ae

Kim Jong Un celebrates New Year in Pyongyang with fireworks, patriotic shows, and his daughter Ju Ae

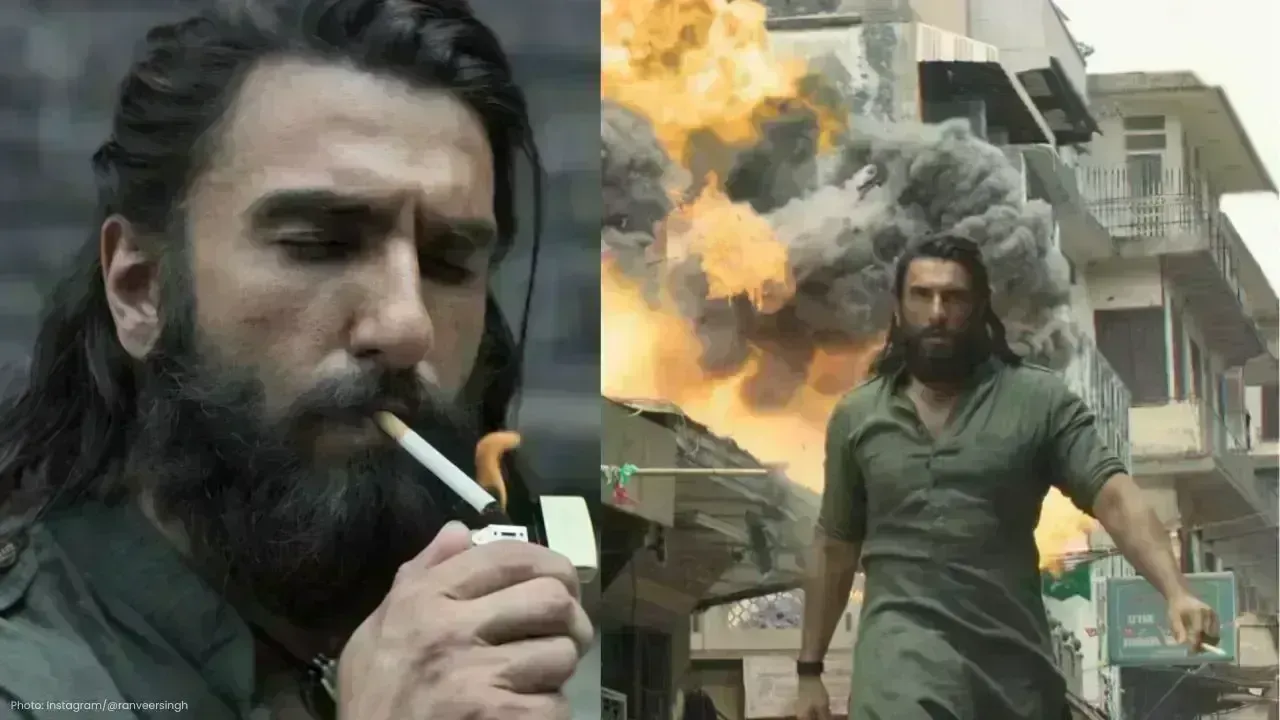

Dhurandhar Day 27 Box Office: Ranveer Singh’s Spy Thriller Soars Big

Dhurandhar earns ₹1117 crore worldwide by day 27, becoming one of 2026’s biggest hits. Ranveer Singh

Hong Kong Welcomes 2026 Without Fireworks After Deadly Fire

Hong Kong rang in 2026 without fireworks for the first time in years, choosing light shows and music

Ranveer Singh’s Dhurandhar Hits ₹1000 Cr Despite Gulf Ban Loss

Dhurandhar crosses ₹1000 crore globally but loses $10M as Gulf nations ban the film. Fans in holiday

China Claims India-Pakistan Peace Role Amid India’s Firm Denial

China claims to have mediated peace between India and Pakistan, but India rejects third-party involv