Post by: Anis Farhan

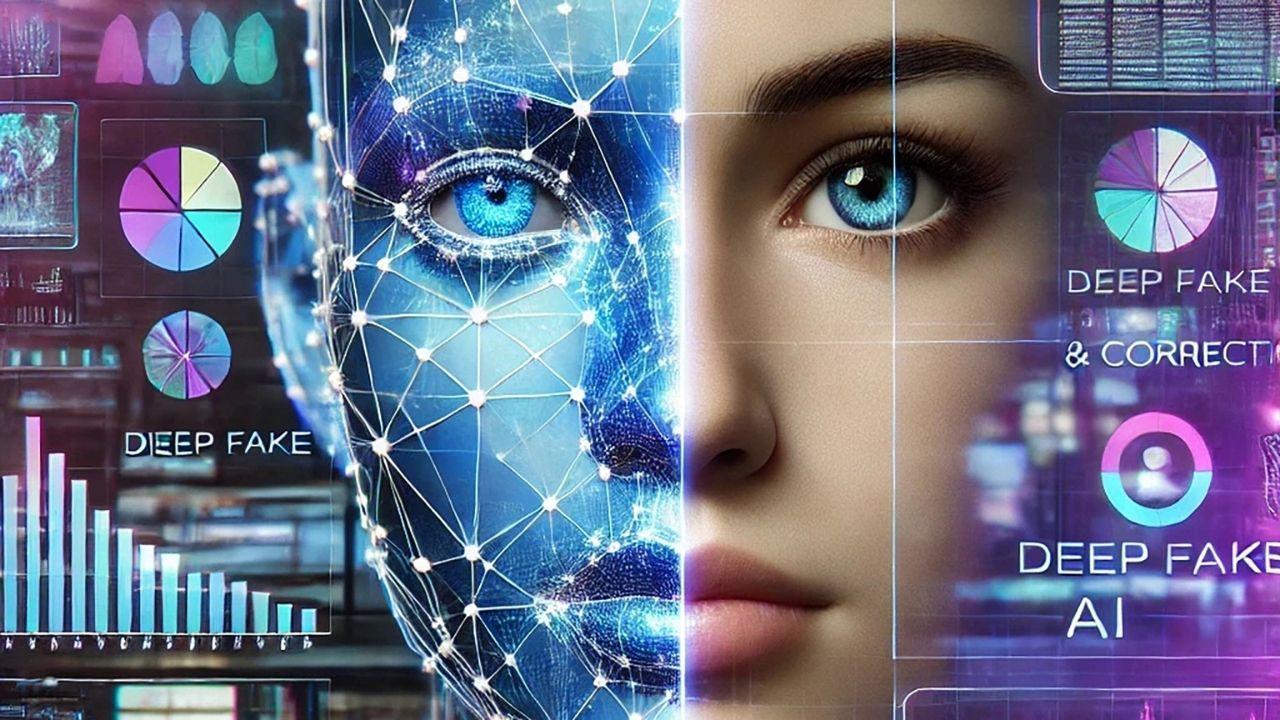

For centuries, human trust in what we see and hear has been foundational to communication. A photograph was considered evidence, a video was regarded as truth, and a recorded voice was accepted as authentic. But with the rise of deepfakes—hyper-realistic but digitally altered videos, images, or audio—the certainty of “seeing is believing” is collapsing. What once required expensive special effects teams can now be achieved with a smartphone app or open-source AI tools.

The deepfake dilemma is not just about technology; it is about trust, governance, and society’s ability to separate reality from manipulation. Deepfake technology is advancing far faster than global legal frameworks can adapt, leaving individuals, businesses, and even democracies vulnerable to digital deception.

Deepfakes use artificial intelligence, specifically deep learning techniques, to manipulate or generate visual and audio content that convincingly imitates real people. The term combines “deep learning” with “fake,” capturing its dual nature: a breakthrough in machine learning and a threat to truth itself.

Examples range from harmless celebrity parodies on social media to malicious creations such as fake political speeches, fabricated news interviews, and non-consensual explicit videos. The underlying AI models learn patterns of speech, movement, and appearance to replicate them so convincingly that it becomes nearly impossible for the average viewer to detect manipulation.

Not all deepfakes are inherently malicious. In entertainment, film studios use AI to de-age actors or resurrect historical figures. In education, deepfakes can recreate famous personalities for immersive learning experiences. Even in accessibility, voice cloning can help those who’ve lost speech regain a digital voice.

However, the dark side is far more concerning. Deepfakes can:

Spread misinformation during elections by fabricating candidate statements.

Be weaponized in cybercrime, where cloned voices authorize fraudulent financial transactions.

Destroy reputations by producing explicit or defamatory content.

Undermine journalism, making authentic evidence harder to defend.

The same tools that fuel creativity can also destabilize truth. This duality creates the regulatory dilemma: how to curb misuse without stifling innovation.

Legal systems across the world are struggling to keep up with the pace of deepfake development. Most existing laws were written in eras when forgery referred to physical documents, defamation meant printed words, and fraud involved face-to-face deception. Deepfakes don’t fit neatly into these traditional categories.

What exactly counts as a deepfake? Is a parody video subject to regulation? Should satire be treated differently from malicious impersonation? Legislators face difficulty defining deepfakes without unintentionally criminalizing legitimate uses such as art or comedy.

Deepfakes cross borders instantly. A fake video created in one country can go viral globally within minutes, leaving regulators powerless. International cooperation is limited, and without harmonized laws, perpetrators can exploit jurisdictional loopholes.

Most legal responses have been reactive—addressing deepfakes only after harm is caused. Victims may pursue defamation or harassment lawsuits, but by the time courts intervene, reputational damage is often irreversible.

AI advancements happen in months, while drafting, debating, and passing legislation can take years. Because of this gap, a law may already be outdated by the time it comes into effect.

Politics: In recent elections across several countries, fake videos of politicians circulated widely, sparking outrage and confusion before being debunked. Even when exposed as false, the damage to public trust lingered.

Finance: CEOs have been impersonated through voice cloning to authorize multimillion-dollar transfers. These scams exploit the credibility of familiar voices.

Personal Harm: Non-consensual explicit deepfakes, often targeting women, account for the majority of malicious deepfake content online, causing severe emotional, reputational, and psychological harm.

These cases highlight that while deepfakes may sometimes be dismissed as internet novelties, their real-world consequences are severe.

Globally, regulations remain fragmented.

United States: Some states have passed laws criminalizing non-consensual deepfake pornography or election-related deepfakes. However, there is no comprehensive federal framework yet.

European Union: The EU’s AI Act is a step toward addressing harmful AI applications. It requires that deepfakes be disclosed as artificially generated or manipulated. Still, enforcement and detection remain weak.

Asia: Countries like China have introduced rules requiring deepfake content to be clearly marked, but implementation varies and censorship concerns complicate enforcement.

This patchwork approach lacks consistency, allowing harmful content to slip through the cracks.

Given the difficulty of legal regulation, many experts argue that technology itself must play a central role in countering deepfakes. Detection tools powered by AI are being developed to spot inconsistencies in videos, such as unnatural blinking patterns, lighting mismatches, or audio-visual discrepancies.
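To make one of these signals concrete, here is a minimal sketch of a blink-rate check. It is an illustration only, not how any particular detection tool works. It assumes a per-frame "eye openness" score (often called an eye aspect ratio) has already been extracted by some face-landmark tool, and every name, threshold, and range below is a hypothetical choice made for this example.

```python
from typing import Sequence


def count_blinks(openness: Sequence[float],
                 threshold: float = 0.2,
                 min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames below `threshold`.

    `threshold` and `min_frames` are illustrative assumptions, not tuned values.
    """
    blinks = 0
    run = 0  # length of the current run of "eye closed" frames
    for value in openness:
        if value < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # a blink still in progress when the clip ends
        blinks += 1
    return blinks


def blinks_per_minute(openness: Sequence[float], fps: float) -> float:
    """Convert the blink count into a per-minute rate for a clip at `fps`."""
    if not openness or fps <= 0:
        raise ValueError("need at least one frame and a positive frame rate")
    duration_minutes = len(openness) / fps / 60.0
    return count_blinks(openness) / duration_minutes


def blink_rate_is_unusual(openness: Sequence[float], fps: float,
                          low: float = 5.0, high: float = 40.0) -> bool:
    """Flag clips whose blink rate falls outside an assumed plausible range.

    The (low, high) range is a placeholder chosen for this sketch.
    """
    rate = blinks_per_minute(openness, fps)
    return rate < low or rate > high


if __name__ == "__main__":
    # One minute of video at 30 fps in which the eyes never close.
    never_blinks = [0.3] * 1800
    print(blink_rate_is_unusual(never_blinks, fps=30))
```

A check like this would only ever be one weak signal among many. A single unusual clip proves nothing on its own, and a generator trained to blink naturally would pass it.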

However, this is a constant arms race. As detection improves, so does generation. Output from newer deepfake models is increasingly hard to distinguish from authentic footage, raising the question of whether AI can ever truly stay ahead of its own creations.

Perhaps the most profound impact of deepfakes is psychological. When people can no longer trust what they see or hear, cynicism grows. Authentic journalism risks being dismissed as “fake news,” and manipulated propaganda gains traction. This erosion of trust is not just a technological problem but a societal one. Democracies rely on shared truths, and deepfakes threaten to undermine the very foundation of informed debate.

While no single solution exists, a multi-pronged approach offers hope.

Stronger Regulations: Governments must craft laws specifically addressing malicious deepfakes, balancing freedom of expression with harm prevention.

Transparency Standards: Platforms hosting videos should require watermarking or disclosure labels for AI-generated content.

Public Awareness: Education campaigns can help citizens recognize deepfakes, reducing their manipulative power.

Corporate Responsibility: Tech companies must take proactive steps in moderating harmful deepfake content and investing in detection tools.

Ethical AI Development: Developers should be held accountable for the misuse of their tools, which gives them an incentive to build safeguards into creation platforms.

As deepfakes grow more convincing, the line between reality and fabrication will blur further. Laws may eventually catch up, but until then, individuals, corporations, and societies must adapt to this new landscape where truth is contested. The challenge lies not just in regulating technology but in preserving the very concept of authenticity in a world where digital deception is effortless.

This article is for informational and educational purposes only. It highlights the challenges posed by deepfake technology and current gaps in regulation. Readers should seek legal and professional guidance for specific cases or policy interpretations related to digital content and AI law.
